The Hadoop Distributed File System

HDFS is a distributed file system designed to overcome some of the limitations of other file systems such as NFS (Network File System), which Unix, Solaris and Mac OS use, to name a few. Some of the distributed computing features of HDFS are:

- Deals with huge amounts of data, i.e. terabytes and petabytes.

- As machines in big data clusters are commodity hardware, there is a risk of machine failure, so data is stored redundantly on multiple machines to keep it available if individual machines in the cluster go down.

- HDFS is built to serve a large number of client requests in parallel, and we can easily add machines to HDFS when needed, which makes it scalable.

- Hadoop is capable of detecting faults within the cluster and recovering from them automatically.

The design of HDFS is derived from the Google File System (GFS).

HDFS is assembled by joining the hard drives of multiple machines. In reality HDFS does not occupy separate physical space of its own; it is a virtual space created from the disks of different machines. People often assume that the file system of a Linux machine and the Hadoop file system are completely separate, but the point to note is that HDFS sits on top of the local file system: its blocks are stored as ordinary files on the local disks of each machine.

HDFS uses a Master/Slave architecture in which one master controls multiple slaves. In Hadoop the master is called the Name Node and the slaves are called Data Nodes. HDFS is a block-structured file system: each file stored in HDFS is broken into blocks (chunks) of fixed size. The default block size is 64 MB (in Hadoop 1.x; later releases default to 128 MB), but it is not mandatory to keep that value, and we can tweak it depending on our requirements. While tweaking the block size we should keep in mind that a very small block size increases the seek time needed to read a file, while a very large block size costs us parallelism when reading a file.
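To make the block arithmetic concrete, here is a minimal sketch of how a file of a given size falls into fixed-size blocks. The function name `split_into_blocks` and the 200 MB file are made up for illustration; this is not Hadoop code, only the same arithmetic.

```python
MB = 1024 * 1024

def split_into_blocks(file_size, block_size=64 * MB):
    """Return (offset, length) pairs, one per block (hypothetical helper)."""
    if block_size <= 0:
        raise ValueError("block_size must be positive")
    blocks = []
    offset = 0
    while offset < file_size:
        # Every block is full-sized except possibly the last one.
        length = min(block_size, file_size - offset)
        blocks.append((offset, length))
        offset += length
    return blocks

# A 200 MB file with 64 MB blocks: three full blocks and one 8 MB block.
blocks = split_into_blocks(200 * MB)
print(len(blocks))
print([length // MB for _, length in blocks])
```

With a larger block size the same file yields fewer blocks, which means fewer pieces that can be processed in parallel.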

WORM

HDFS is Write Once, Read Many (WORM). The theory behind WORM is that in the big data space there are usually huge data sets available in the form of logs, feeds, transactions, social media and so on. Out of all that we might be interested in only a few fields to run some analysis on. So we do not perform ETL (Extract, Transform and Load) the way a typical RDBMS and SQL workflow does. It makes little sense to transform the data while writing it: a single record may have 40 to 50 fields, while we are interested in only 3 to 5 of them for our analysis. So ideally we should dump the data as it is, i.e. write once, and then run our MapReduce jobs, scripts, algorithms etc. on the fields which are meaningful to us, i.e. read multiple times depending on our needs.
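The idea of dumping records as they are and picking fields only at read time can be sketched in a few lines. The log layout and field names below are invented for illustration, and the `project` helper is hypothetical; a real job would do the same projection inside a MapReduce task or script.

```python
import csv
import io

# Raw data written once, exactly as it arrived (field names are made up).
RAW_LOG = """user_id,ip,country,browser,url,status,bytes
42,10.0.0.1,IN,Firefox,/home,200,512
43,10.0.0.2,US,Chrome,/cart,500,128
"""

def project(raw_text, wanted):
    """Pick only the wanted fields from each record at read time."""
    reader = csv.DictReader(io.StringIO(raw_text))
    return [{name: row[name] for name in wanted} for row in reader]

# Each analysis reads the same raw data again and chooses its own fields.
rows = project(RAW_LOG, ["user_id", "status"])
print(rows)
```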

So let us understand how HDFS writes data.

Writing data into HDFS

Your Hadoop cluster is useless until it has data, so we’ll begin by loading our huge File.txt into the cluster for processing. The goal here is fast parallel processing of lots of data. To accomplish that we need as many machines as possible working on this data in parallel.

The Client is ready to load File.txt into the cluster and breaks it up into blocks (in our example we assume a purely hypothetical block size of 1 GB), starting with Block A. The Client tells the Name Node that it wants to write File.txt and receives a list of 3 active Data Nodes for each block, a unique list for each block. The Name Node internally uses its Rack Awareness data to decide which Data Nodes to provide in these lists. The key rule the Name Node follows is that for every block of data, two copies will exist in one rack and another copy in a different rack. So the list provided to the Client will follow this rule.
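The placement rule described above (two copies in one rack, one copy in a different rack) can be sketched as follows. This is a simplified illustration, not the Name Node's actual placement code; the function `choose_replicas`, the rack names and the node names are all assumptions made for the example.

```python
import random

def choose_replicas(racks, rng=random):
    """racks: dict of rack name -> list of live Data Node names.
    Returns three Data Nodes: two from one rack, one from another rack."""
    double_racks = [r for r, nodes in racks.items() if len(nodes) >= 2]
    if not double_racks:
        raise ValueError("need a rack with at least two live Data Nodes")
    first_rack = rng.choice(double_racks)
    other_racks = [r for r, nodes in racks.items() if r != first_rack and nodes]
    if not other_racks:
        raise ValueError("need a second rack with a live Data Node")
    second_rack = rng.choice(other_racks)
    pair = rng.sample(racks[first_rack], 2)    # two copies in one rack
    single = rng.choice(racks[second_rack])    # one copy in a different rack
    return pair + [single]

racks = {
    "rack1": ["DN1", "DN2", "DN3"],
    "rack2": ["DN7", "DN8", "DN9"],
}
print(choose_replicas(racks))
```

Because the choice is random, each block can receive a different list, which matches the "unique list for each block" behaviour described above.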

Let’s understand the chain of communication between the Client, the Name Node and the Data Nodes. When the Client wants to write Block A of 1 GB into HDFS, it first wants to know that all the Data Nodes expected to hold a copy of this block are alive and ready to receive it. The Client picks the first Data Node in the list for Block A, i.e. Data Node 1, opens a TCP connection on port 50010 and says, “Buddy, get ready to receive a block, and here’s a list of two Data Nodes, Data Node 3 and Data Node 8. Go make sure the other two Data Nodes are ready to receive this block too.” Data Node 1 then opens a TCP connection to Data Node 3 and says, “Hey, get ready to receive a block, and go make sure Data Node 8 is ready to receive the block too.” Data Node 3 will then ask Data Node 8, “Hey, are you ready to receive a block?”

The acknowledgments of completion come back over the same TCP connections: Data Node 8 sends a “Finish” message to Data Node 3, Data Node 3 sends “Complete” to Data Node 1, and finally Data Node 1 sends a “Finish” message back to the Client. At this point the Client is ready to begin writing the next block of data into the cluster.
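The chain of "get ready" requests going down the list and the acknowledgments coming back up can be modelled with a small recursive function. This is only a toy model of the conversation described above, with no real networking; `prepare_pipeline` and the message strings are invented for the sketch.

```python
def prepare_pipeline(pipeline, alive, log):
    """Ask the first Data Node to get ready and to check the rest of the chain.
    Returns True only if every Data Node in the pipeline answered."""
    if not pipeline:
        return True  # nothing further downstream to check
    head, downstream = pipeline[0], pipeline[1:]
    if head not in alive:
        log.append(f"{head}: no response")
        return False
    log.append(f"{head}: asked to get ready, downstream={downstream}")
    ready = prepare_pipeline(downstream, alive, log)
    # The acknowledgment travels back up the same chain.
    log.append(f"{head}: {'ready' if ready else 'not ready'}")
    return ready

log = []
ok = prepare_pipeline(["DN1", "DN3", "DN8"], {"DN1", "DN3", "DN8"}, log)
print(ok)
for line in log:
    print(line)
```

The Client only talks to the first Data Node; each node is responsible for the one after it, and the answers arrive in reverse order (Data Node 8, then 3, then 1).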

A couple of points to note here:

- Data is kept in different racks. Keeping two copies in one rack and one copy in another rack ensures that if an entire rack fails we still have a copy in another rack. We also improve data locality for other Hadoop jobs by keeping 2 copies in one rack. Note also that an entire rack failing is a very rare possibility.

- Another reason to keep two copies in the same rack is to achieve high throughput while reading data, because two machines in the same rack have more bandwidth and lower latency between them.

- The Client does not send the block to all 3 Data Nodes identified by the Name Node, because the Client would be choked by transmitting all the copies at the same time. That is why the Client sends the block only to Data Node 1; it is then Data Node 1’s headache to pass the block on to Data Node 3, which in turn passes it on to Data Node 8.

- Each Data Node saves the block along with a checksum. The checksum is verified while reading blocks from HDFS to ensure that each block is read completely and to detect corrupted blocks.

- The Name Node builds its metadata from the block reports it receives from the Data Nodes.
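The checksum point from the list above can be illustrated with a small sketch. It assumes a single CRC32 per block, computed with Python's `zlib.crc32`, which is a simplification for illustration; the helpers `store_block` and `read_block` are hypothetical and are not HDFS functions.

```python
import zlib

def store_block(data):
    """Save the block together with its checksum (simplified: one CRC per block)."""
    return {"data": data, "checksum": zlib.crc32(data)}

def read_block(stored):
    """Recompute the checksum on read and refuse to return corrupted data."""
    if zlib.crc32(stored["data"]) != stored["checksum"]:
        raise IOError("checksum mismatch: block is corrupted")
    return stored["data"]

stored = store_block(b"contents of Block A")
print(read_block(stored))

# Simulate corruption on disk: the data changes but the saved checksum does not.
stored["data"] = b"contents of Block X"
try:
    read_block(stored)
except IOError as err:
    print(err)
```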

Reading data from HDFS

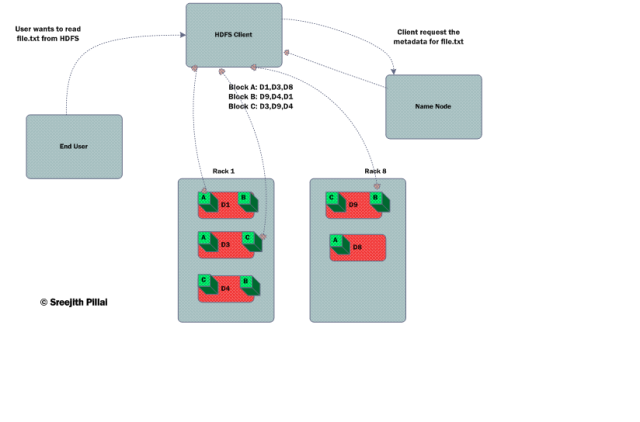

Reading data from HDFS is a very simple process. When a user wants to read data from HDFS, the user gives a read command to the Client. The Client asks the Name node for the block metadata of the requested file. The Name node returns, for each block, a list of the Data nodes that hold it.

The Client gets the metadata list for the file from the Name node. After that the Client picks a Data node for each block and reads one block at a time over TCP. In our case the Client reads Block A from D1, Block B from D9 and Block C from D3. Once it has all the required blocks, it assembles them to form the file. If, for example, while reading Block A from D1 the Client finds that the block is corrupted when verifying the checksum, it will switch to D3 from the list and read Block A from there.
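The read path with a fallback to another replica can be sketched like this. The data layout (a dict of Data nodes holding `(data, checksum)` pairs) and the function `read_file` are assumptions made for the example, and a single CRC32 per block stands in for the real checksum scheme.

```python
import zlib

def read_file(block_locations, datanodes):
    """block_locations: list of (block_id, [Data node names]) from the Name node.
    datanodes: dict of node name -> {block_id: (data, stored_checksum)}."""
    parts = []
    for block_id, candidates in block_locations:
        for node in candidates:
            replica = datanodes.get(node, {}).get(block_id)
            if replica is None:
                continue  # node is down or does not hold this block
            data, stored_crc = replica
            if zlib.crc32(data) == stored_crc:
                parts.append(data)
                break  # healthy copy found, move on to the next block
        else:
            raise IOError(f"no healthy replica for block {block_id}")
    return b"".join(parts)

def good(data):
    return (data, zlib.crc32(data))

datanodes = {
    "D1": {"A": (b"aXa", zlib.crc32(b"aaa"))},  # corrupted copy of Block A
    "D3": {"A": good(b"aaa"), "C": good(b"ccc")},
    "D9": {"B": good(b"bbb")},
}
locations = [("A", ["D1", "D3"]), ("B", ["D9"]), ("C", ["D3"])]
print(read_file(locations, datanodes))
```

Block A fails the checksum on D1, so the sketch falls back to D3, just as in the example above.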

The Name Node also uses Rack Awareness to provide optimal network behavior. For example, if the Client runs on a Data node, the Name node checks whether that Data node or another Data node in the same rack has the data. If so, the Name node puts those Data nodes first in the list it returns. This keeps network transmission to a minimum, and data can be retrieved faster because the traffic does not have to leave the rack.
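One way to picture this ordering is to sort the replicas by how far they are from the Client. The distance values 0, 1 and 2 below are made up for the sketch, as are the function `order_by_proximity` and the rack layout; the point is only that the local node comes first, then the same rack, then other racks.

```python
def order_by_proximity(client_node, replicas, rack_of):
    """Sort replica holders so the closest Data node comes first."""
    client_rack = rack_of.get(client_node)

    def distance(node):
        if node == client_node:
            return 0  # data is on the Client's own machine
        if client_rack is not None and rack_of.get(node) == client_rack:
            return 1  # same rack: traffic stays on the rack switch
        return 2      # different rack

    # sorted() is stable, so nodes at equal distance keep their original order.
    return sorted(replicas, key=distance)

rack_of = {"D1": "rack1", "D2": "rack1", "D3": "rack2", "D9": "rack2"}
print(order_by_proximity("D2", ["D3", "D1", "D9"], rack_of))
```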
